Unfortunately, we were informed that the JOGL library, just like LWJGL, has failed to get IP approval due to severe licensing problems (see CQ2817 and GQ2840 for further details; IPZilla account needed). To summarize, LWJGL cannot be approved because it contains non-BSD code or code with unknown license or provenance, and JOGL cannot be approved because “we have been unable to locate an individual within Sun/Oracle to help us with JOGL”, as Janet Campbell wrote when closing the CQ. In this context we'd like to thank Janet and Barb for all their hard work!

What this means for Eclipse is that, even though it is technically possible to use 3D / OpenGL within Eclipse, there is no way to create self-contained Eclipse plugins that require OpenGL. Such plugins would always have to rely on external update sites, which complicates the installation process. Such update sites also depend on third-party support and may or may not be available in future releases. For GEF3D, this is really bad news, but it is also a problem for all other 3D-related projects.

The only option appears to be to create a new Eclipse project for OpenGL bindings. Some work has been done in the SWT project itself, but the code has since been abandoned. Unfortunately, writing OpenGL bindings for Java is not a simple job due to inconsistencies and driver incompatibilities.

Jens wrote GEF3D in the context of his Ph.D. thesis, and we were both paid by the FernUniversität in Hagen. Additionally, we spent a lot of our spare time on GEF3D. We are both still interested in GEF3D, but unfortunately our current work is not really 3D-related; that is, neither of us is paid for maintaining GEF3D any longer. This would be OK to a certain degree, but frankly neither of us is keen on writing a new OpenGL wrapper library on our own without any salary.

So, we'd like to know whether we are alone in our effort to bring 3D to Eclipse: Do you want 3D (i.e. OpenGL) in Eclipse? And if you do, what are your thoughts on this situation? As this is not a simple yes/no question, please leave us a comment.

Jens and Kristian

Technical Note: OpenGL is required for almost all 3D applications, and high-level libraries such as the JMonkeyEngine or Aviatrix3D use OpenGL (i.e. LWJGL or JOGL) under the hood. Technically, OpenGL can easily be used in SWT applications thanks to the GLCanvas class. However, a Java wrapper library is needed for calling OpenGL functions. As far as we know, only two such libraries are available: LWJGL and JOGL. At http://www.eclipse.org/swt/opengl/, gljava is listed as well, but this project is no longer maintained. The same is true for the org.eclipse.opengl bindings. There is a plugin for JOGL and another for LWJGL. The plugin for LWJGL was written in the context of GEF3D and was maintained by the LWJGL team for quite a while (they have changed their build system, so the LWJGL plugin is no longer maintained at the moment; however, the update site is still available).

Monday, September 13, 2010

Wednesday, May 5, 2010

Multi editor 3D and property sheet pages

Besides enabling 3D editors, IMHO one of the most interesting features of GEF3D is the ability to combine existing editors. I already blogged about that feature a year ago, and GEF3D has improved a lot since then.

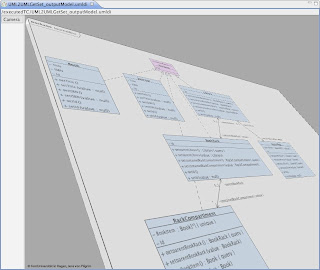

The screenshots show a UML use case diagram (visualized with 3D-fied UML tools) and an Ecore diagram (visualized with 3D-fied Ecore tools). The 3D-fied editors and the multi editor combining them are examples of GEF3D (that is, you will find everything in the SVN).

The rendering quality is now as good as in 2D, so it really makes sense to think about supporting 3D when creating new editors. However, the nice thing about GEF3D is that you do not have to write new editors from scratch; instead, you can reuse existing editors. And these reused editors can not only be adapted to support 3D -- we call this "3D-fied" -- but they can also be adapted so that they can be combined. For that, all you have to do is let them implement a small interface, INestableEditor. It is very simple; the GEF3D examples include 3D-fied and nestable versions of the UML2 Tools editors and the Ecore Tools editors.

When combining editors, a lot of new problems arise. One of these problems is how to create new edit parts. Every nested editor comes with its own factory -- but which one should be used for newly created elements? This problem has been solved in GEF3D for quite some time now (by the multi factory pattern). I only recently became aware of another "combination" problem: the property sheet page. Every editor provides its own page, created almost hidden via getAdapter(). Now -- which page do you want to use in a multi editor? The answer is simple: the right page, depending on the selected element. And this is what I've added to GEF3D: depending on the currently selected element, the page provided by the nested editor responsible for that element is used. The latest (SVN) revision of GEF3D provides a new page, called MultiEditorPropertySheetPage, which nests all the pages provided by the nested editors. Depending on the currently selected element, the appropriate page is shown, as you can see in the screenshots. Like many things in GEF3D, this feature is almost transparent to the programmer.
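To make the idea of "the right page, depending on the selected element" a bit more tangible, here is a minimal sketch in plain Java. It is not the GEF3D code and it does not use the Eclipse API: `Page`, `NestedEditor`, `TypedEditor` and `MultiPageSketch` are hypothetical stand-ins invented for this illustration, and the "responsibility" check is simply based on the Java type of the selected element.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for a property sheet page (not the Eclipse interface). */
interface Page {
    String describe(Object element);
}

/** Hypothetical stand-in for a nested editor that owns some elements. */
interface NestedEditor {
    boolean isResponsibleFor(Object element);

    Page getPage(); // in a real editor the page would be obtained via getAdapter()
}

/** Delegates to the page of whichever nested editor is responsible for the selection. */
class MultiPageSketch implements Page {
    private final List<NestedEditor> editors = new ArrayList<>();

    void addEditor(NestedEditor editor) {
        editors.add(editor);
    }

    /** Returns the page of the first responsible editor, or null if there is none. */
    Page pageFor(Object selected) {
        for (NestedEditor editor : editors) {
            if (editor.isResponsibleFor(selected)) {
                return editor.getPage();
            }
        }
        return null;
    }

    @Override
    public String describe(Object selected) {
        Page page = pageFor(selected);
        return page == null ? "no page" : page.describe(selected);
    }
}

/** Demo editor: responsible for all elements of one Java type (an assumption). */
class TypedEditor implements NestedEditor {
    private final Class<?> type;
    private final String name;

    TypedEditor(Class<?> type, String name) {
        this.type = type;
        this.name = name;
    }

    @Override
    public boolean isResponsibleFor(Object element) {
        return type.isInstance(element);
    }

    @Override
    public Page getPage() {
        return element -> name + " page for " + element;
    }
}
```

With two `TypedEditor` instances registered, selecting an element of the first type is answered by the first editor's page, and an element that no editor claims falls back to "no page". The point of the design is that the multi editor itself knows nothing about the contents of the nested pages.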

In many cases models are visualized as diagrams, and with GEF3D and its multi editor feature you will be able to easily display all your diagrams in a single 3D scene -- with things you aren't able to visualize in 2D editors, such as inter-model connections (traces, markers, mappings, or other kinds of weaving models). Some products use the term "modeling IDE" -- think about the "I" in IDE and what GEF3D does with your models ;-)

BTW: The very same concept used for implementing property sheet pages may be implemented for the Outline View as well -- volunteers wanted :-D

Jens

Tuesday, February 2, 2010

Improving Visual Rendering Quality

As Jens mentioned in his recent blog post, parts of the Draw3D renderer have been rewritten in the past weeks. The initial motivation was to improve the visual rendering quality of 2D content (embedded GEF editors) and text, but during development it turned out that a lot of optimization would be necessary to keep the performance at acceptable levels. Eventually, I rewrote the renderer to take advantage of some advanced OpenGL features and now the performance is a lot better than it ever was.

In this blog post I will briefly explain how the 2D rendering system was redesigned over the course of GEF3D's existence and how it was possible to achieve both a gain in the visual quality and the rendering performance at the same time.

First, let me introduce the initial 2D rendering system that Jens designed before I came on board. GEF uses an instance of the abstract class Graphics to draw all figures. Actually, figures draw themselves using their paint method whose only parameter is an instance of Graphics (this is defined in the IFigure interface). The Graphics class provides a lot of methods to draw graphical primitives like lines, rectangles, polygons and so forth, as well as methods to manage the state of a graphics object. Usually, GEF passes an instance of SWTGraphics to the root of the figure subtree that needs redrawing. SWTGraphics uses a graphics context to draw graphical primitives, and the graphics context usually draws directly onto some graphics resource like an image or a canvas.
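The pattern described above, where figures paint themselves onto whatever Graphics object they are handed, can be sketched in a few lines of plain Java. The types below (`SketchGraphics`, `SketchFigure`, `BoxFigure`, `RecordingGraphics`) are hypothetical stand-ins, not the Draw2D classes, and the real Graphics class has far more methods. The sketch only shows why swapping the Graphics implementation is enough to redirect the output.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical, heavily reduced stand-in for an abstract graphics class. */
abstract class SketchGraphics {
    abstract void drawLine(int x1, int y1, int x2, int y2);

    abstract void drawRectangle(int x, int y, int w, int h);
}

/** Figures know how to paint themselves, but not where the output ends up. */
interface SketchFigure {
    void paint(SketchGraphics g);
}

/** A box with one diagonal line. */
class BoxFigure implements SketchFigure {
    private final int x, y, w, h;

    BoxFigure(int x, int y, int w, int h) {
        this.x = x;
        this.y = y;
        this.w = w;
        this.h = h;
    }

    @Override
    public void paint(SketchGraphics g) {
        g.drawRectangle(x, y, w, h);
        g.drawLine(x, y, x + w, y + h);
    }
}

/** One possible backend: records the drawing commands instead of rendering them. */
class RecordingGraphics extends SketchGraphics {
    final List<String> commands = new ArrayList<>();

    @Override
    void drawLine(int x1, int y1, int x2, int y2) {
        commands.add("line " + x1 + " " + y1 + " " + x2 + " " + y2);
    }

    @Override
    void drawRectangle(int x, int y, int w, int h) {
        commands.add("rect " + x + " " + y + " " + w + " " + h);
    }
}
```

A backend that draws into an image, into a texture, or directly into 3D space would be just another subclass; the figures themselves stay untouched.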

So what Jens did when he wanted to allow 2D content in GEF3D was that he simply passed an instance of SWTGraphics to the 2D figures that rendered into an image in memory. This image was then transferred to the graphics card and used as a texture. This system was very simple and required hardly any additional coding at all. The problem with this approach, however, is that whenever the 2D content needed redrawing (after some model change, for example), the entire image had to be redrawn and uploaded to the graphics card again, which is a very costly process. First, the image has to be converted into a ByteBuffer, and that buffer must then be uploaded from system to video memory through the bus. For a normal-sized image, this can take up to 500 ms.

To alleviate this problem, I wrote another Graphics subclass that uses OpenGL to render the 2D primitives directly into a texture image in video memory. This eliminated the uploading step and thus improved performance considerably, especially by removing the delay after a model change, when the texture image previously had to be uploaded into video memory. But it did not help with the second major problem: It still used textures. The problem with using textures to display 2D content in 3D is that while the texture image may look sharp and very good by itself, it gets blurry and distorted when it is projected into 3D space due to all the filtering that has to take place. Images that contain text in particular become very hard to read with this approach, as you can see in this screenshot:

Another approach to rendering 2D content in 3D is not to use textures at all, but to render all 2D primitives directly into 3D space in every frame (so far, the texture only had to be redrawn after a model change occurred). This eliminates all problems related to texture filtering and blurring once and for all. Combined with vector fonts (to be described in another blog post), direct rendering results in the best possible visual quality. The problem is that everything needs to be rendered in every frame all the time. I quickly discovered that simply sending all geometry data to OpenGL in every frame (this is also called OpenGL immediate mode) would kill performance - even in small diagrams, navigation became sluggish.

Essentially, the FPS in GEF3D are limited not by the triangle throughput of the video card (how many triangles can be rendered per second?), but by the bus speed (how much data can we send to the video card in a second?). If you send all your geometry, color and texture data to the video card on every frame, your performance will be very bad because sending large amounts of data to the video card is very expensive. The more data you can store permanently in video memory, the better your performance will be (until you get limited by triangle throughput). So we had to find a way to store as much data as possible in video memory and just execute simple drawing instructions on every frame.

Of course, OpenGL provides several ways to do this. The first and oldest approach is to use display lists, which are basically a way to tell OpenGL to compile a number of instructions and data into a function that resides in video memory. It's like a stored procedure that we can call every time we need some stuff rendered. Display lists are fine for small stuff like rendering a cube or something. 2D diagrams, however, consist of large amounts of arbitrary geometry, which cannot be compiled into display lists at all. So this approach was not useful for us.

The best way to store geometry data in video memory is called a vertex buffer object (VBO) in OpenGL. Essentially, a VBO is one (or more) buffers that contain vertices (and other data like colors and texture coordinates). These buffers only need to be uploaded into video memory once (or when some geometry changes) and can then be drawn by issuing as few as five commands in every frame. We decided to adopt this approach and try it for our 2D diagrams by storing the 2D primitives in vertex buffers in video memory. Rendering a 2D diagram would then be very fast and simple, because hardly any data must be sent to the video card per frame. This is how the pros do it, so it should work for us too!
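To illustrate why this matters, here is a small back-of-the-envelope simulation in plain Java. It does not call OpenGL at all: `FakeGpu` is a hypothetical stand-in that merely counts how many floats would cross the bus, once for an immediate-mode style of drawing and once for an upload-once, draw-many style like the one vertex buffers allow. The numbers it produces are only as meaningful as that simplification.

```java
/** Counts how many floats cross the simulated bus; no real OpenGL is involved. */
class FakeGpu {
    long floatsTransferred = 0;
    private float[] storedBuffer = new float[0];

    /** Immediate-mode style: the geometry travels with every draw call. */
    void drawImmediate(float[] vertices) {
        floatsTransferred += vertices.length;
    }

    /** Buffer style: upload once and keep a copy in simulated video memory. */
    void uploadBuffer(float[] vertices) {
        storedBuffer = vertices.clone();
        floatsTransferred += vertices.length;
    }

    /** Drawing the stored buffer sends a command only, no vertex data. */
    int drawStoredBuffer() {
        return storedBuffer.length / 3; // number of (x, y, z) vertices drawn
    }
}

class TransferSketch {
    /** Floats sent when all geometry is resent in every frame. */
    static long immediateCost(float[] vertices, int frames) {
        FakeGpu gpu = new FakeGpu();
        for (int i = 0; i < frames; i++) {
            gpu.drawImmediate(vertices);
        }
        return gpu.floatsTransferred;
    }

    /** Floats sent when the geometry is uploaded once and then only drawn. */
    static long bufferedCost(float[] vertices, int frames) {
        FakeGpu gpu = new FakeGpu();
        gpu.uploadBuffer(vertices);
        for (int i = 0; i < frames; i++) {
            gpu.drawStoredBuffer();
        }
        return gpu.floatsTransferred;
    }
}
```

For 3,000 vertices (9,000 floats) and 60 frames, the immediate variant moves 540,000 floats, while the buffered variant moves 9,000 regardless of the number of frames, as long as the geometry does not change.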

In theory, that is correct. But in practice, it is very hard to actually create a vertex buffer out of the 2D content of a 2D diagram. Since a vertex buffer can only contain a series of graphical primitives (triangles, quadrilaterals, lines) of the same type and the primitives that make up the 2D diagram are drawn in random order, the primitives need to be sorted properly so that we can create large vertex buffers from them. Unfortunately, the primitives cannot simply be sorted by their type and then converted into vertex buffers, because there are dependencies between primitives that intersect. To cut a long story short, I had to think of a way to sort primitives into disjoint sets. Each set contains only primitives of the same type, and each set should be maximal so that you end up with a small number of large buffers, because that's how you achieve maximum performance.
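The following is a minimal sketch of one way to do such a sorting, written in plain Java. It is not the algorithm used in the Draw3D renderer (which is not described here), but a simple greedy strategy chosen for illustration: every primitive is put into the earliest batch of its own type that does not come before a batch containing a primitive it overlaps. That keeps the paint order of overlapping primitives intact, but it does not claim to produce the smallest possible number of batches. All type names (`Kind`, `Primitive`, `Batch`, `BatchingSketch`) are made up, and overlap is tested on bounding boxes only.

```java
import java.util.ArrayList;
import java.util.List;

enum Kind { LINE, TRIANGLE, QUAD }

/** A 2D primitive reduced to its type and bounding box (hypothetical type). */
class Primitive {
    final String name;
    final Kind kind;
    final int x, y, w, h;

    Primitive(String name, Kind kind, int x, int y, int w, int h) {
        this.name = name;
        this.kind = kind;
        this.x = x;
        this.y = y;
        this.w = w;
        this.h = h;
    }

    /** True if the two bounding boxes overlap. */
    boolean intersects(Primitive o) {
        return x < o.x + o.w && o.x < x + w && y < o.y + o.h && o.y < y + h;
    }
}

/** A set of primitives of one type that could go into one vertex buffer. */
class Batch {
    final Kind kind;
    final List<Primitive> items = new ArrayList<>();

    Batch(Kind kind) {
        this.kind = kind;
    }
}

class BatchingSketch {
    /** Groups primitives (given in paint order) into batches of a single type. */
    static List<Batch> batch(List<Primitive> paintOrder) {
        List<Batch> batches = new ArrayList<>();
        for (Primitive p : paintOrder) {
            // Find the last batch that contains something p overlaps;
            // p must not be drawn before that batch.
            int lastOverlap = -1;
            for (int i = 0; i < batches.size(); i++) {
                for (Primitive q : batches.get(i).items) {
                    if (q.intersects(p)) {
                        lastOverlap = i;
                        break;
                    }
                }
            }
            // Reuse the earliest allowed batch of the same type, if any.
            Batch target = null;
            for (int i = Math.max(lastOverlap, 0); i < batches.size(); i++) {
                if (batches.get(i).kind == p.kind) {
                    target = batches.get(i);
                    break;
                }
            }
            if (target == null) {
                target = new Batch(p.kind);
                batches.add(target);
            }
            target.items.add(p);
        }
        return batches;
    }
}
```

For the paint order line A, quad B, line C, quad D (where D overlaps A and nothing else overlaps), grouping only consecutive primitives would give four batches. The sketch yields two: the lines A and C, followed by the quads B and D, so D is still drawn after A.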

The end result is impressive: We used to have performance problems with diagrams that contain more than 2000 2D nodes, and now we can display 4000 2D nodes at 120 FPS, and all that with much better visual quality. To get an idea of how much better the quality of the 2D diagrams is in this version, check out the following screenshot:

Kristian

Screenshot: TopCased editor, 3D version with 2D texture

Screenshot: Ecore editor 3D with high quality 2D content

Friday, January 22, 2010

2.5D breaks free.. and future plans

I assume Kristian will tell you more in a future post, but I simply couldn't wait to show you this picture:

Screenshot: GEF3D ecore editor (rev. 436)

It shows a screenshot of the 3D-fied version of the Ecore Tools editor, which is part of the GEF3D examples. Compare this image (GEF3D rev. 436) with the following one, taken using an older version (rev. 413) of GEF3D showing the very same diagram:

Screenshot: GEF3D ecore editor, rev. 413

Do you see the difference? Yeah! 2D figures are no longer bound to their diagram plane! In other words: 2.5D breaks free! (If you don't know the term 2.5D, read our tutorial article about GEF3D available at Jaxenter.com!) Besides, the display quality of 2D content has improved since it is now rendered as vector graphics and no longer as a smudgy texture projected onto a plane. Usually, increased display quality leads to decreased speed, but not in this case! Actually, GEF3D is no longer the limiting factor when displaying large diagrams -- it's GEF (or GMF). The texture-based version of GEF3D had problems with diagrams containing about 5,000 (2D) nodes. The new version runs smoothly even with this many nodes! You may run into a memory problem when opening such large diagrams (due to GEF/GMF), but if you can open one, the camera can be moved smoothly! Great work, Kristian! He has become a real 3D programming pro, and I'm absolutely impressed by how he has improved GEF3D in the last weeks. Stay tuned for his post about this new technique!

At the moment, Kristian is also working on replacing the texture-based font rendering with vector fonts, which will dramatically improve the overall quality of the rendered images. Besides, the GEF3D team has set up a todo list, summarizing bugs with new features (to be implemented in the near, not so near and far future):

The most important tasks are to add support for full 3D editing, e.g. moving and resizing figures in z-direction and rotation, implement advanced animation support and, depending on that, camera tracks. If you have ideas, please post an article on the GEF3D newsgroup!

I certainly have some bias, but with vector-based 2D content (and fonts) and camera tracks (e.g., for positioning a diagram in a kind of 2D view), the quality and comfort of editing a 2.5D diagram will become the same as editing it with pure GEF in 2D. But with GEF3D, you can work with multiple diagrams much more comfortably: If you have to edit multiple diagrams with inter-model connections, you will be able to simply navigate to another diagram (and back again). And you can actually see the inter-model connections (for an example, read Kristian's post about his 3D GMF mapping editor). 2D is dead, long live 3D!

Well, OK, I have probably watched too much Avatar 3D ;-). But maybe you like the idea of cool 3D diagrams, too? Then join the GEF3D team, grab yourself a bug and see how much fun 3D programming with GEF3D can be! Yes, I know... there is no release of GEF3D available yet... I will take care of that as soon as possible, and I hope that with the help of Miles Parker we will be able to set up a build system shortly. Until then, use the project team set and check out GEF3D from the SVN repository; an installation tutorial can be found in the Wiki.

Last but not least, I'm happy to announce the third and (so far) last part of our GEF3D article series in the German Eclipse Magazin, 2.10. In this part, Kristian and I explain how to 3D-fy existing GMF editors.

Jens

Tuesday, January 19, 2010

A Graphical Editor for the GMF Mapping Model

Everyone who has ever worked with GMF knows that it can be painful due to the steep learning curve and the tools it provides for editing the GMF models. The complexity of the models in combination with the very basic tooling makes learning and using GMF harder than it should be. Recently, efforts have been made to improve this situation. There is for example EuGenia, which was presented at the ESE 2009. EuGenia uses annotations to add information to the domain model so that the GMF GraphModel and MapModel can be generated automatically. For my diploma thesis however, I followed a different approach to improve the GMF tooling. Jens gave me the task to create a 3D graphical editor for the GMF mapping model. I would like to present the result of my efforts in this blog entry.

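The basic concept for such an editor can be summed up by the following bullet points:

- The editor should display the domain model, the GraphModel, the ToolModel and the MapModel together in a 3D view.

- The models should be visualized graphically, thus a graphical notation must be developed for each of them.

- References from the MapModel to the other models should be visualized using 3D connections.

- It should be possible to edit the MapModel entirely by dragging elements from the other models (or rather, their diagrams) to the MapModel. The user should not need to use any other methods to edit the MapModel.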

Thus, the first step was to develop graphical notations for the domain model, the GraphModel and the MapModel. The ToolModel was excluded to limit the amount of work, and it can be added at a later stage. I began by creating 2D GEF editors that simply display the aforementioned models. Obviously, with the Ecore Tools there already is a graphical notation and a very capable editor for EMF based domain models, so no work had to be done there.

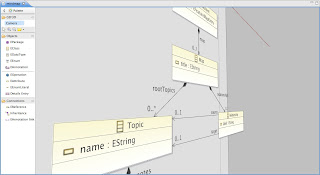

The main focus in creating a graphical notation for the GraphModel was to render the figures in the figure gallery just as they would look in the generated editor. The diagram elements defined in the model should be grouped by their type (node, connection, diagram label and compartment), and the layout should be automatic, without the need for user interaction. The following screenshot demonstrates this.

Developing a graphical notation for the MapModel was a little more complex because it needs to convey more information than the notation for the GraphModel does:

As you can see in the above screenshot, every model element is represented using a figure that has a gray border and title bar. These figures are nested, and those figures that represent a mapping (like NodeMapping) display the referenced figure as their child. Additional information is displayed at the bottom of some of the figures in the form of a list of named properties. CompartmentMappings are nested into the figures to which they belong and contain the child references that link to the contained domain model elements.

With all the graphical notations in place, the next step was to combine those into a 3D multi editor. In order to do that, the 2D GEF editors had to be 3D-fied first. A 3D version of the Ecore Tools already exists as a GEF3D example, so that was just reused here. The other editors could be easily 3D-fied by adapting four classes each.

Combining the three viewers into a multi editor is also a pretty easy task with the tools that GEF3D already contains. There is an abstract base class (AbstractMultiEditor3D) for multi editors that allows any editor implementing the interface INestableEditor to be embedded. There are only very few things that need to be taken care of manually here, for example making sure that all embedded editors use the same EMF ResourceSet to load their models. Once everything is in place, a multi editor is used by opening one of the models using the newly created editor and dragging the other models onto the editor window - easy! The result of this step is displayed in the following screenshot.

As you can see, this version of the editor already displays references from the MapModel to the other models using 3D connections. This has been implemented by using the UnidirectEditPart3D base class that comes with GEF3D. This class allows creating connections that are created by either the source or target edit part, which is exactly what was needed in this situation, since neither the domain model nor the GraphModel are aware of any references that a MapModel might have to their elements.

At this point, all that is missing from the editor is the actual editing functionality. It was decided to use a model transformation language to implement the editing functions (which always modify the MapModel in some way). This transformation language is called Mitra and was created by Jens von Pilgrim for his Ph.D. thesis. Mitra is an acronym that stands for "Micro Transformations". The language is optimized for fine-grained, semi-automatic model transformations, as the name indicates. Since the Mitra rules that implement the editing functionality are triggered by drag and drop operations, a GEF tool is needed that can do this. Generally speaking, a drag and drop operation can also be interpreted as selecting a number of parameters for a rule. The model elements that are dragged are called source parameters, while the model element on which the elements are dropped is the target parameter.

Consider the following example: Say you want to create a LinkMapping. For this you need a connection from the GraphModel and a domain element that represents the association in the domain model (this could be either an association class or a simple reference). For the sake of simplicity, let the association be modeled by a reference in the domain model. Now to create a LinkMapping, you can drag the connection from the GraphModel and drop it on the MapModel. This creates a new LinkMapping. To set the link feature, you would now drag the association from the domain model and drop it on the LinkMapping, etc.

There is one remaining problem however: How are the transformation rules selected? Currently, whenever an element is dropped, a popup menu is displayed that contains all rules defined for the editor. This is demonstrated in the following screenshot.

Obviously, this is not optimal, and there are plans to implement automatic rule selection by matching the parameter types against the rule signatures, but this is still on the todo list. But even if this is implemented, there will be cases in which there are multiple rules that match the parameter types, and in those cases, the rule name should be replaced by some more meaningful names in the menu.

Note that no references to the domain model are set - to see how this is done you can watch the next video:

Finally, the last video demonstrates how to create a ChildNodeReference inside an existing CompartmentMapping and a LinkMapping:

Kristian

The basic concept for such an editor can be summed up by the following bullet points:

- The editor should display the domain model, the GraphModel, the ToolModel and the MapModel together in a 3D view.

- The models should be visualized graphically, thus a graphical notation must be developed for each of them.

- References from the MapModel to the other models should be visualized using 3D connections.

- It should be possible to edit the MapModel entirely by dragging elements from the other models (or rather, their diagrams) to the MapModel. The user should not need to use any other methods to edit the MapModel.

- The editor should create as many elements of the MapModel as possible automatically to assist the user.

Graphical Notations

Thus, the first step was to develop graphical notations for the domain model, the GraphModel and the MapModel. The ToolModel was excluded to limit the amount of work; it can be added at a later stage. I began by creating 2D GEF editors that simply display the aforementioned models. With the Ecore Tools, there already is a graphical notation and a very capable editor for EMF-based domain models, so no work had to be done there.

[Screenshot: Ecore Tools Editor]

The main focus in creating a graphical notation for the GraphModel was to render the figures in the figure gallery just as they would look in the generated editor. The diagram elements defined in the model should be grouped by their type (node, connection, diagram label and compartment), and the layout should be automatic, without the need for user interaction. The following screenshot demonstrates this.

[Screenshot: Graphical Notation for the GraphModel]

Developing a graphical notation for the MapModel was a little more complex because it needs to convey more information than the notation for the GraphModel does:

- The MapModel mirrors the containment relationships of the domain model (using NodeReferences, NodeMappings and Compartments), which results in a hierarchical structure. This structure should be represented visually by nesting the figures which represent the model elements.

- The MapModel needs to display additional information, such as which domain element is mapped.

- The mappings should be represented by the figures which they map to in the GraphModel. That is, if a NodeMapping maps to a blue rectangle figure (in the GraphModel), then that NodeMapping should render itself as a blue rectangle as well.

[Screenshot: Graphical Notation for the MapModel]

As you can see in the above screenshot, every model element is represented using a figure that has a gray border and title bar. These figures are nested, and those figures that represent a mapping (like NodeMapping) display the referenced figure as their child. Additional information is displayed at the bottom of some of the figures in the form of a list of named properties. CompartmentMappings are nested into the figures to which they belong and contain the child references that link to the contained domain model elements.

With all the graphical notations in place, the next step was to combine those into a 3D multi editor. In order to do that, the 2D GEF editors had to be 3D-fied first. A 3D version of the Ecore Tools already exists as a GEF3D example, so that was just reused here. The other editors could be easily 3D-fied by adapting four classes each.

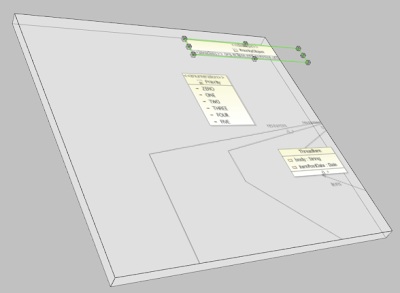

Multi Editor

Combining the three viewers into a multi editor is also a pretty easy task with the tools that GEF3D already contains. There is an abstract base class (AbstractMultiEditor3D) for multi editors that allows any editor implementing the interface INestableEditor to be embedded. Only very few things need to be taken care of manually here, for example making sure that all embedded editors use the same EMF ResourceSet to load their models. Once everything is in place, a multi editor is used by opening one of the models with the newly created editor and dragging the other models onto the editor window. Easy! The result of this step is displayed in the following screenshot.
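Why the shared ResourceSet matters can be illustrated with a small sketch. It deliberately uses plain Java stand-ins instead of the EMF or GEF3D APIs: `ModelStore`, `LoadedModel` and `NestedEditorSketch` are hypothetical names invented for this example. The assumption behind it is that a resource set hands out one loaded instance per URI, so editors sharing a set see the very same model objects, while editors with separate sets each get their own copy and cross-model references no longer point at the elements the other editors display.

```java
import java.util.HashMap;
import java.util.Map;

/** Plain-Java stand-in for a loaded model; hypothetical, not an EMF class. */
final class LoadedModel {
    final String uri;

    LoadedModel(String uri) {
        this.uri = uri;
    }
}

/** Stand-in for a resource set: hands out exactly one instance per URI. */
final class ModelStore {
    private final Map<String, LoadedModel> cache = new HashMap<>();

    LoadedModel load(String uri) {
        // Load on first request, return the cached instance afterwards.
        return cache.computeIfAbsent(uri, LoadedModel::new);
    }
}

/** Stand-in for a nested editor that loads its model through a given store. */
final class NestedEditorSketch {
    private final ModelStore store;

    NestedEditorSketch(ModelStore store) {
        this.store = store;
    }

    LoadedModel open(String uri) {
        return store.load(uri);
    }
}
```

Two `NestedEditorSketch` instances created with the same `ModelStore` return the identical object for the same URI; an editor created with its own store gets a different instance of the same model.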

[Screenshot: Multi Editor for the Mapping Model]

As you can see, this version of the editor already displays references from the MapModel to the other models using 3D connections. This has been implemented with the UnidirectEditPart3D base class that comes with GEF3D. With this class, a connection can be created by either the source or the target edit part alone, which is exactly what was needed in this situation, since neither the domain model nor the GraphModel is aware of any references that a MapModel might have to its elements.

Dropformations

At this point, all that is missing from the editor is the actual editing functionality. It was decided to use a model transformation language to implement the editing functions (which always modify the MapModel in some way). This transformation language is called Mitra and was created by Jens von Pilgrim for his Ph.D. thesis. Mitra stands for "Micro Transformations". As the name indicates, the language is optimized for fine-grained, semi-automatic model transformations. Since the Mitra rules that implement the editing functionality are triggered by drag and drop operations, a GEF tool is needed that handles these operations. Generally speaking, a drag and drop operation can also be interpreted as selecting a number of parameters for a rule. The model elements that are dragged are called source parameters, while the model element on which they are dropped is the target parameter.

Consider the following example: Say you want to create a LinkMapping. For this you need a connection from the GraphModel and a domain element that represents the association in the domain model (this could be either an association class or a simple reference). For the sake of simplicity, let the association be modeled by a reference in the domain model. To create a LinkMapping, you drag the connection from the GraphModel and drop it on the MapModel. This creates a new LinkMapping. To set the link feature, you then drag the association from the domain model and drop it on the LinkMapping, and so on.
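The two drops from this example can be sketched as two small rules, each taking a source parameter (the dragged element) and a target parameter (the drop target). This is a rough illustration in plain Java, not Mitra, whose syntax is not shown here. All class, field and method names (`SketchConnection`, `SketchReference`, `SketchLinkMapping`, `SketchMapping`, `DropRules`, `diagramLink`, `linkFeature`) are hypothetical stand-ins and not the actual GMF or GEF3D types.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for a connection defined in the GraphModel. */
final class SketchConnection {
    final String name;

    SketchConnection(String name) {
        this.name = name;
    }
}

/** Hypothetical stand-in for a reference in the domain model. */
final class SketchReference {
    final String name;

    SketchReference(String name) {
        this.name = name;
    }
}

/** Hypothetical stand-in for a LinkMapping in the MapModel. */
final class SketchLinkMapping {
    SketchConnection diagramLink; // set when the mapping is created
    SketchReference linkFeature;  // set by a second drop
}

/** Hypothetical stand-in for the root of the MapModel. */
final class SketchMapping {
    final List<SketchLinkMapping> links = new ArrayList<>();
}

final class DropRules {
    /** Source: a GraphModel connection. Target: the MapModel. Creates a LinkMapping. */
    static SketchLinkMapping createLinkMapping(SketchConnection source, SketchMapping target) {
        SketchLinkMapping link = new SketchLinkMapping();
        link.diagramLink = source;
        target.links.add(link);
        return link;
    }

    /** Source: a domain reference. Target: an existing LinkMapping. Sets its link feature. */
    static void setLinkFeature(SketchReference source, SketchLinkMapping target) {
        target.linkFeature = source;
    }
}
```

Calling `createLinkMapping` and then `setLinkFeature` on the returned object mirrors the two drag and drop steps described above.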

There is one remaining problem, however: how are the transformation rules selected? Currently, whenever an element is dropped, a popup menu is displayed that contains all rules defined for the editor. This is demonstrated in the following screenshot.

[Screenshot: Rule Selection Menu]

Obviously, this is not optimal. There are plans to implement automatic rule selection by matching the parameter types against the rule signatures, but this is still on the to-do list. Even once this is implemented, there will be cases in which multiple rules match the parameter types, and in those cases the menu should show more meaningful labels instead of the raw rule names.
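One possible shape for such a signature-based selection is sketched below. It is only an illustration of the idea under stated assumptions, not the planned implementation: it is written in plain Java rather than Mitra, and `RuleSignature`, `RuleSelector` and the `Demo...` model classes are hypothetical names. A rule is assumed to declare one target type and an ordered list of source types, and a drop matches if every dragged element and the drop target are instances of the declared types.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-ins for model element types.
final class DemoConnection {}
final class DemoNode {}
final class DemoMapping {}

/** Hypothetical rule description: a name plus the declared parameter types. */
final class RuleSignature {
    final String name;
    final Class<?> targetType;        // type of the element dropped on
    final List<Class<?>> sourceTypes; // types of the dragged elements, in order

    RuleSignature(String name, Class<?> targetType, Class<?>... sourceTypes) {
        this.name = name;
        this.targetType = targetType;
        this.sourceTypes = Arrays.asList(sourceTypes);
    }

    /** True if the dragged elements and the drop target fit this signature. */
    boolean matches(List<?> sources, Object target) {
        if (!targetType.isInstance(target) || sources.size() != sourceTypes.size()) {
            return false;
        }
        for (int i = 0; i < sources.size(); i++) {
            if (!sourceTypes.get(i).isInstance(sources.get(i))) {
                return false;
            }
        }
        return true;
    }
}

final class RuleSelector {
    /** Returns the names of all rules whose signature accepts the given drop. */
    static List<String> candidates(List<RuleSignature> rules, List<?> sources, Object target) {
        List<String> result = new ArrayList<>();
        for (RuleSignature rule : rules) {
            if (rule.matches(sources, target)) {
                result.add(rule.name);
            }
        }
        return result;
    }
}
```

If `candidates` returns exactly one name, the rule could be run without showing a menu; if it returns several, only those would need to be offered to the user.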

Demonstrations

This first video shows how a TopNodeReference (including a NodeMapping, LabelMappings and CompartmentMappings) is created simply by dragging a Node from the GraphModel onto the MapModel.

Note that no references to the domain model are set here; to see how this is done, watch the next video:

Finally, the last video demonstrates how to create a ChildNodeReference inside an existing CompartmentMapping and a LinkMapping:

Kristian

Friday, January 15, 2010

GEF3D tutorial article in English language available

I'm happy to announce that the English version of the GEF3D tutorial article "GEF goes 3D" (source code see here) is now available online at JAXenter.com. The German version was printed in the Eclipse Magazin 6.09 and is available online, too. This article is the first part; it explains the basic concepts of GEF3D and how to "3d-fy" a very simple GEF-based editor. The second part, about creating multi-editors, is already available in German (I will translate it as soon as possible), and a third part, about how to 3D-fy GMF-based editors, will probably be published in the next issue of the Eclipse Magazin. A list of all GEF3D-related articles and papers can be found at the GEF3D website. Thanks to Hartmut Schlosser for publishing the article online and to Madhu Samuel for proofreading the English version.

BTW: Stay informed about the latest GEF3D news by following GEF3D on Twitter

Jens
