In this blog post I will briefly explain how the 2D rendering system was redesigned over the course of GEF3D's existence, and how the redesign improved both visual quality and rendering performance at the same time.

First, let me introduce the initial 2D rendering system that Jens designed before I came on board. GEF uses an instance of the abstract class Graphics to draw all figures. Actually, figures draw themselves using their paint method whose only parameter is an instance of Graphics (this is defined in the IFigure interface). The Graphics class provides a lot of methods to draw graphical primitives like lines, rectangles, polygons and so forth, as well as methods to manage the state of a graphics object. Usually, GEF passes an instance of SWTGraphics to the root of the figure subtree that needs redrawing. SWTGraphics uses a graphics context to draw graphical primitives, and the graphics context usually draws directly onto some graphics resource like an image or a canvas.

When Jens wanted to allow 2D content in GEF3D, he simply passed the 2D figures an instance of SWTGraphics that rendered into an image in memory. This image was then transferred to the graphics card and used as a texture. This system was very simple and required hardly any additional coding at all. The problem with this approach, however, is that whenever the 2D content needed redrawing (after some model change, for example), the entire image had to be redrawn and uploaded to the graphics card again, which is a very costly process. First, the image has to be converted into a ByteBuffer, and that buffer must then be uploaded from system memory to video memory through the bus. For a normal-sized image, this can take up to 500 ms.
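
To get a feeling for why this step is costly, here is a minimal Java sketch of the conversion. It assumes the pixels are already available as packed ARGB ints; the class and method names are made up for illustration and are not GEF3D code. A 1024 x 1024 image needs 1024 * 1024 * 4 = 4,194,304 bytes, all of which have to be repacked and then sent over the bus (for example with glTexImage2D) after every change.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical helper, for illustration only (not part of GEF3D).
final class TextureUpload {

    /** Repacks ARGB pixels (one int per pixel) into a direct RGBA byte buffer. */
    static ByteBuffer toRgbaBuffer(int[] argbPixels, int width, int height) {
        if (argbPixels.length != width * height) {
            throw new IllegalArgumentException("pixel count does not match size");
        }
        // Four bytes per pixel: this is the amount of data sent over the bus.
        ByteBuffer buffer = ByteBuffer.allocateDirect(width * height * 4)
                .order(ByteOrder.nativeOrder());
        for (int argb : argbPixels) {
            buffer.put((byte) (argb >> 16));  // red
            buffer.put((byte) (argb >> 8));   // green
            buffer.put((byte) argb);          // blue
            buffer.put((byte) (argb >>> 24)); // alpha
        }
        buffer.flip(); // make the buffer readable from position 0
        // The buffer would now be handed to the OpenGL binding, e.g. glTexImage2D.
        return buffer;
    }
}
```

Every pixel is touched once on the CPU and then transferred again, no matter how small the actual change in the diagram was.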

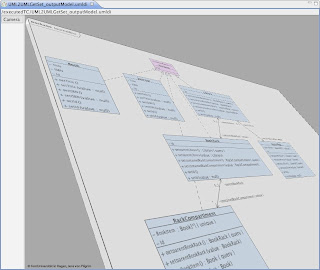

To alleviate this problem, I wrote another Graphics subclass that uses OpenGL to render the 2D primitives directly into a texture image in video memory. This eliminated the upload step and improved performance considerably, in particular by removing the delay after a model change, when the texture image previously had to be uploaded into video memory. But it did not help with the second major problem: it still used textures. The problem with using textures to display 2D content in 3D is that while the texture image may look sharp by itself, it gets blurry and distorted when it is projected into 3D space because of all the filtering that has to take place. Images that contain text in particular become very hard to read with this approach, as you can see in this screenshot:

Screenshot: TopCased editor, 3D version with 2D texture

Essentially, the frame rate in GEF3D is limited not by the triangle throughput of the video card (how many triangles can be rendered per second?), but by the bus speed (how much data can we send to the video card per second?). If you send all your geometry, color and texture data to the video card on every frame, performance will be very bad, because sending large amounts of data to the video card is very expensive. The more data you can keep permanently in video memory, the better your performance will be (until you become limited by triangle throughput). So we had to find a way to store as much data as possible in video memory and execute only simple drawing instructions on every frame.

Of course, OpenGL provides several ways to do this. The first and oldest approach is to use display lists, which are basically a way to tell OpenGL to compile a number of instructions and data into a function that resides in video memory. It's like a stored procedure that we can call every time we need something rendered. Display lists are fine for small things like rendering a cube. 2D diagrams, however, consist of large amounts of arbitrary geometry, which cannot be compiled into display lists at all. So this approach was not useful for us.

The best way to store geometry data in video memory is called a vertex buffer object (VBO) in OpenGL. Essentially, a VBO consists of one or more buffers that contain vertices (and other data like colors and texture coordinates). These buffers only need to be uploaded into video memory once (or again when some geometry changes) and can then be drawn by issuing as few as five commands in every frame. We decided to adopt this approach for our 2D diagrams by storing the 2D primitives in vertex buffers in video memory. Rendering a 2D diagram would then be very fast and simple, because hardly any data must be sent to the video card per frame. This is how the pros do it, so it should work for us too!
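
A minimal sketch of the CPU side of this idea: packing line primitives into one interleaved buffer that could then be handed to OpenGL. The class name, the vertex layout (x, y, r, g, b) and the input format are assumptions for illustration, not GEF3D's actual code. The OpenGL calls appear only in comments because their exact Java form depends on the binding in use.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

// Hypothetical helper, for illustration only (not part of GEF3D).
final class LineBufferBuilder {

    /** Assumed vertex layout: x, y, r, g, b. */
    static final int FLOATS_PER_VERTEX = 5;

    /** Packs lines given as rows {x1, y1, x2, y2, r, g, b} into one buffer. */
    static FloatBuffer pack(float[][] lines) {
        int floats = lines.length * 2 * FLOATS_PER_VERTEX; // two vertices per line
        FloatBuffer buffer = ByteBuffer.allocateDirect(floats * Float.BYTES)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer();
        for (float[] l : lines) {
            buffer.put(l[0]).put(l[1]).put(l[4]).put(l[5]).put(l[6]); // start vertex
            buffer.put(l[2]).put(l[3]).put(l[4]).put(l[5]).put(l[6]); // end vertex
        }
        buffer.flip();
        // Once, or when geometry changes: glGenBuffers, glBindBuffer,
        // glBufferData(GL_ARRAY_BUFFER, ..., GL_STATIC_DRAW).
        // Per frame (fixed-function style): glBindBuffer, glVertexPointer,
        // glColorPointer, glDrawArrays(GL_LINES, 0, vertexCount).
        return buffer;
    }
}
```

The expensive part (building and uploading the buffer) happens only when the diagram changes; the per-frame work shrinks to a handful of calls that reference data already in video memory.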

In theory, that is correct. But in practice, it is very hard to actually create a vertex buffer out of the content of a 2D diagram. Since a vertex buffer can only contain a series of graphical primitives (triangles, quadrilaterals, lines) of the same type, and the primitives that make up the 2D diagram are drawn in arbitrary order, the primitives need to be sorted properly so that we can create large vertex buffers from them. Unfortunately, the primitives cannot simply be sorted by their type and then converted into vertex buffers, because primitives that intersect depend on each other: their relative drawing order must be preserved. To cut a long story short, I had to think of a way to sort primitives into disjoint sets. Each set contains only primitives of the same type, and each set should be as large as possible, so that you end up with a small number of large buffers, because that is how you achieve maximum performance.
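
The post does not spell out the actual sorting algorithm, so the following Java sketch is only one plausible greedy strategy and not necessarily what GEF3D does. It assumes that every primitive has a type and an axis-aligned bounding box, and that two primitives depend on each other exactly when their boxes overlap. All names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical greedy batcher, for illustration only (not GEF3D's algorithm).
final class PrimitiveBatcher {

    enum Type { LINE, TRIANGLE, QUAD }

    static final class Primitive {
        final Type type;
        final int x, y, width, height; // assumed bounding box

        Primitive(Type type, int x, int y, int width, int height) {
            this.type = type; this.x = x; this.y = y;
            this.width = width; this.height = height;
        }

        boolean intersects(Primitive o) {
            return x < o.x + o.width && o.x < x + width
                && y < o.y + o.height && o.y < y + height;
        }
    }

    static final class Batch {
        final Type type;
        final List<Primitive> primitives = new ArrayList<>();
        Batch(Type type) { this.type = type; }
    }

    /** Groups primitives (given in draw order) into same-type batches. */
    static List<Batch> batch(List<Primitive> drawOrder) {
        List<Batch> batches = new ArrayList<>();
        for (Primitive p : drawOrder) {
            // p must not be drawn before anything it overlaps, so find the
            // last batch that contains an overlapping primitive.
            int earliest = 0;
            for (int i = batches.size() - 1; i >= 0; i--) {
                if (overlapsAny(batches.get(i), p)) { earliest = i; break; }
            }
            // Reuse the first same-type batch at or after that position.
            Batch target = null;
            for (int i = earliest; i < batches.size(); i++) {
                if (batches.get(i).type == p.type) { target = batches.get(i); break; }
            }
            if (target == null) {
                target = new Batch(p.type);
                batches.add(target);
            }
            target.primitives.add(p); // appending keeps order inside a batch
        }
        return batches;
    }

    private static boolean overlapsAny(Batch batch, Primitive p) {
        for (Primitive q : batch.primitives) {
            if (q.intersects(p)) return true;
        }
        return false;
    }
}
```

A primitive may be moved into an earlier batch only if nothing it overlaps lives in a later one, which is what keeps overlapping primitives in their original order. Each batch can then become one vertex buffer, and the batches are drawn in list order.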

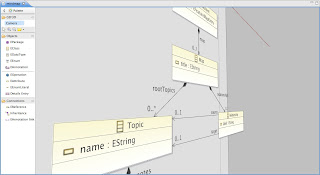

The end result is impressive: We used to have performance problems with diagrams that contain more than 2000 2D nodes, and now we can display 4000 2D nodes at 120 FPS, and all that with much better visual quality. To get an idea of how much better the quality of the 2D diagrams is in this version, check out the following screenshot:

Screenshot: Ecore editor 3D with high quality 2D content
